- Andrew Hagedorn

Scaling Browser Interaction Tests

Browser interaction tests are a double-edged sword. They are the closest an automated test comes to how a user will actually interact with your website, so they are also the closest you can get to ensuring that your website actually works. Depending on your framework, you can potentially run each of your tests across multiple browsers. When they are combined with some form of screenshot testing to prevent visual regressions, the only way to be more confident is to manually QA every user flow continuously.

The flip side is that they are also the most expensive class of automated test you can run. They are much more prone to flakiness because of network lag, server performance, and peculiarities in how each framework interacts with a given browser. And scaling these solutions (i.e. to hundreds or thousands of tests) is a delicate trade-off between the time it takes to run the tests and cost.

In my experience, the protection provided by browser interaction tests has been invaluable, and over the years I have either worked on or observed some patterns for scaling both Selenium and Cypress interaction tests.

Selenium

At an incredibly high level, running tests at scale with Selenium has a few pieces:

- The application under test

- A test running framework like NUnit, JUnit, or Jest

- WebDriver, a layer that interacts with browser-specific drivers to perform actions in the browser

- Selenium Grid, a server that can proxy commands from WebDriver to any number of remote browser instances

Scaling Selenium to run many tests quickly is as simple as adding more remote browser instances. Yes. Simple.
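To make the "proxy commands" part concrete, here is a minimal sketch of the HTTP requests a WebDriver client would send to a grid, following the W3C WebDriver endpoints for creating a session, navigating, and ending the session. It only builds the requests and does not send them. The `WireRequest` type, the function names, and the grid address are assumptions made for illustration, and some grid versions expect a `/wd/hub` prefix on the path.

```typescript
// Shape of an HTTP request a WebDriver client would send to a grid.
// (Illustrative type, not part of any Selenium library.)
interface WireRequest {
  method: "POST" | "DELETE";
  url: string;
  body?: unknown;
}

// Hypothetical grid address; substitute your own hub URL.
const GRID_URL = "http://selenium-grid.internal:4444";

// W3C "New Session": ask the grid for any free browser of this type.
function newSession(gridUrl: string, browserName: string): WireRequest {
  return {
    method: "POST",
    url: `${gridUrl}/session`,
    body: { capabilities: { alwaysMatch: { browserName } } },
  };
}

// W3C "Navigate To": the grid forwards this to whichever browser owns the session.
function navigateTo(gridUrl: string, sessionId: string, pageUrl: string): WireRequest {
  return {
    method: "POST",
    url: `${gridUrl}/session/${sessionId}/url`,
    body: { url: pageUrl },
  };
}

// W3C "Delete Session": frees the browser for the next test.
function deleteSession(gridUrl: string, sessionId: string): WireRequest {
  return { method: "DELETE", url: `${gridUrl}/session/${sessionId}` };
}

console.log(JSON.stringify(newSession(GRID_URL, "chrome"), null, 2));
```

Because the test only ever talks to the grid URL, adding more remote browsers changes nothing in the test code itself, which is why scaling is mostly an infrastructure question.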

Homegrown Solution

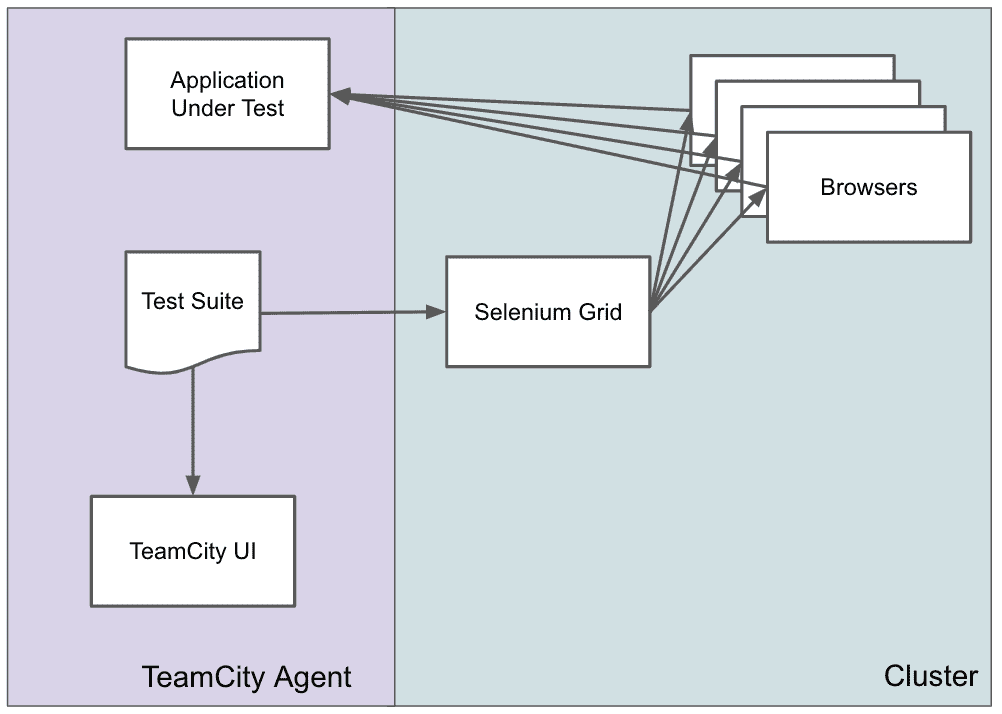

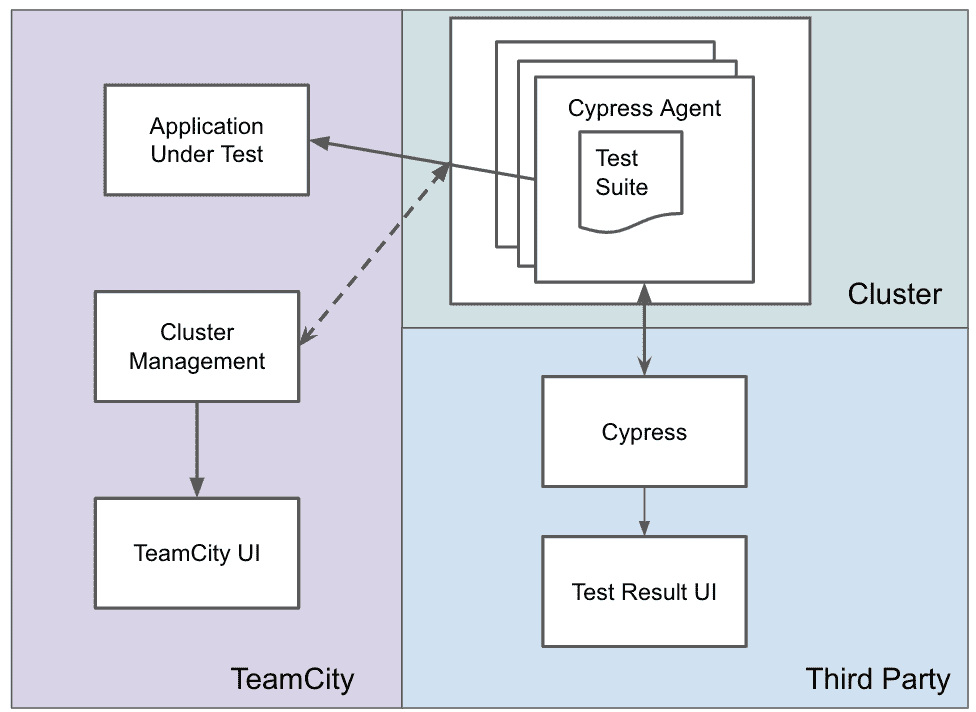

The early 2010s were when I first started aggressively testing with Selenium. This was before many of the third-party providers available today were prominent (or, in some cases, even existed), so the only option was to roll your own setup. At Zocdoc we had an internal team dedicated to scaling the infrastructure for running tests against our monolithic codebase. As the years passed, we eventually settled on a Docker-based solution in which each test run created new resources and dumped them in a cluster to run tests.

With some Docker containers, a fair bit of Ansible, and an available cluster, this can run a large number of tests in a reasonable amount of time. In my experience, the run time is largely determined by the amount of test parallelization your test application can withstand and the amount of money you are willing to spend to reduce your CI time. Given enough money, you can scale up your test server and browser count, or shard your test suite and run multiple parallel instances, to effectively run thousands of tests.

With enough time and effort you can also build in a lot of debugging features, like persisting HAR files, test videos, and screenshots of the failure state of tests. And while Selenium and our particular test runner have worked effectively for years now, this setup has a couple of major drawbacks: test maintenance and the effort to add new features. For instance, adding IE testing was a goal at one point, but the effort required to make it stable meant it was not worth it.
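As an illustration of the sharding idea, here is a minimal sketch that splits spec files across a fixed number of parallel instances using a greedy "longest first" heuristic. This is not the tooling we actually used. The `SpecFile` shape, the `estimatedSeconds` field, and the file names are hypothetical, and in practice the estimates would come from timings of earlier runs.

```typescript
// A spec file plus a rough duration estimate (e.g. from a previous run).
interface SpecFile {
  path: string;
  estimatedSeconds: number;
}

// Greedy sharding: place the longest remaining spec on the currently lightest shard.
function shardSpecs(specs: SpecFile[], shardCount: number): SpecFile[][] {
  if (!Number.isInteger(shardCount) || shardCount < 1) {
    throw new Error("shardCount must be a positive integer");
  }
  const shards: SpecFile[][] = Array.from({ length: shardCount }, () => []);
  const totals: number[] = Array.from({ length: shardCount }, () => 0);

  // Sort a copy, longest first; ties broken by path so the result is deterministic.
  const sorted = [...specs].sort(
    (a, b) => b.estimatedSeconds - a.estimatedSeconds || a.path.localeCompare(b.path),
  );

  for (const spec of sorted) {
    // Find the shard with the smallest accumulated time so far.
    let lightest = 0;
    for (let i = 1; i < shardCount; i++) {
      if (totals[i] < totals[lightest]) lightest = i;
    }
    shards[lightest].push(spec);
    totals[lightest] += spec.estimatedSeconds;
  }
  return shards;
}

// Hypothetical suite: five specs split across two parallel instances.
const exampleShards = shardSpecs(
  [
    { path: "booking.spec", estimatedSeconds: 60 },
    { path: "search.spec", estimatedSeconds: 50 },
    { path: "login.spec", estimatedSeconds: 40 },
    { path: "profile.spec", estimatedSeconds: 30 },
    { path: "reviews.spec", estimatedSeconds: 20 },
  ],
  2,
);
console.log(exampleShards.map((shard) => shard.map((s) => s.path)));
```

Each shard can then be handed to its own test server and set of browsers, so the wall-clock time approaches that of the slowest shard rather than the sum of all specs.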

Third Party

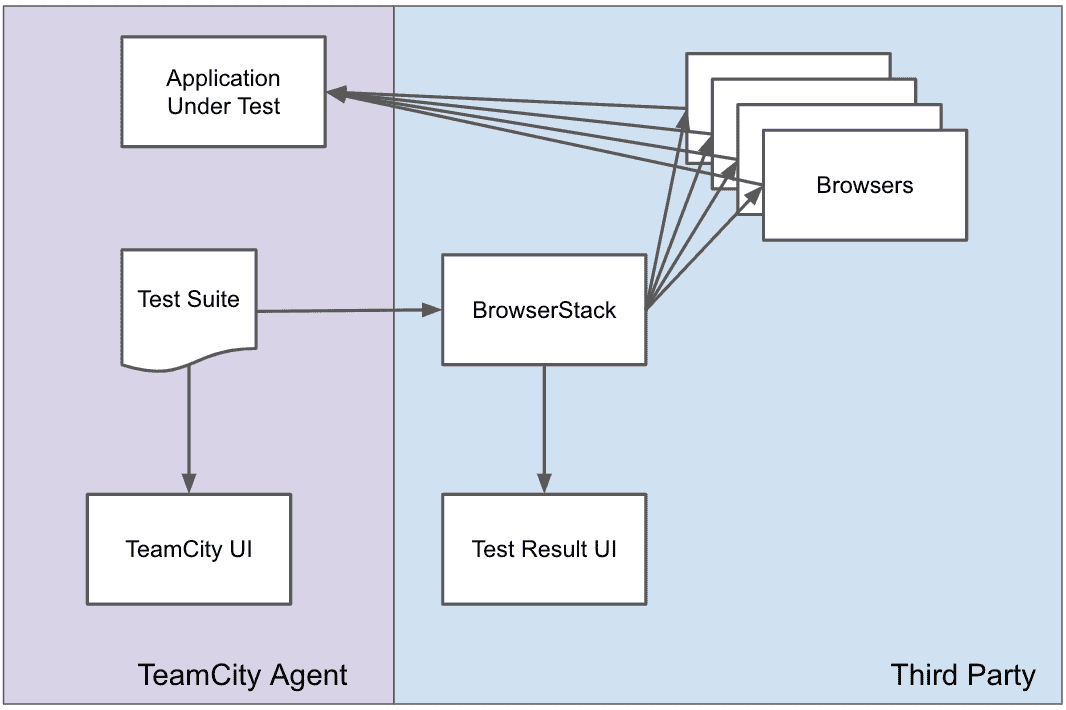

By 2016 or 2017, for better or worse, we had started moving some of our web applications out of the monolithic codebase and rewriting them in React. To perform integration testing we could have tried to replicate the monolith's Selenium Grid, but we wanted to see if we could remove most of that complexity by using BrowserStack. In this setup, you pay for a fixed number of parallel test runners and delegate all the test running to BrowserStack. They might not be using Selenium Grid, but regardless, from the perspective of the test runner it operates the same way.

In our case we were using WebdriverIO as the testing framework, and scaling was simply a function of paying more money for more test runners. And you pay.

Using BrowserStack allowed us to offload the effort of maintaining the test running infrastructure while keeping most of the debugging tools we were used to. It also allowed us to experiment with IE tests with no implementation cost other than specifying a new value in the configuration. And while we ultimately decided they were not worth our time, we never would have learned that with our homegrown infrastructure.

Unfortunately, all the pros of not maintaining the infrastructure were outweighed by the negatives we experienced with BrowserStack. While technically available, debugging tools like videos were so slow as to be useless. More importantly, having the application in one datacenter and the test runners somewhere else meant that the network caused much more test flakiness than we were accustomed to. The only way to battle this was to keep adding arbitrary waits to the test code, to the point that our run times increased significantly.
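For a sense of what "specifying a new value in the configuration" can look like, here is a rough sketch of a WebdriverIO configuration pointed at BrowserStack. It is not our actual configuration. The environment variable names, spec path, framework choice, and instance count are placeholders, and the BrowserStack service for WebdriverIO is assumed to be installed.

```typescript
// wdio.conf.ts (sketch). Every value here is a placeholder, not a real setting.
export const config = {
  // BrowserStack credentials, read from the environment.
  // The variable names are an assumption; use whatever your CI provides.
  user: process.env.BROWSERSTACK_USERNAME,
  key: process.env.BROWSERSTACK_ACCESS_KEY,

  // Delegates session handling to BrowserStack instead of a self-hosted grid.
  services: ["browserstack"],

  specs: ["./test/specs/**/*.ts"],
  framework: "mocha",

  // Upper bound on concurrent browser sessions. In practice this is capped by
  // the number of parallel runners your plan pays for.
  maxInstances: 10,

  capabilities: [
    { browserName: "chrome" },
    // Trying out IE is one more entry in this list rather than new infrastructure.
    { browserName: "internet explorer", browserVersion: "11" },
  ],
};
```

The test code stays the same. Scaling up means raising `maxInstances` along with the paid plan, and adding a browser means adding a capability entry.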

Cypress

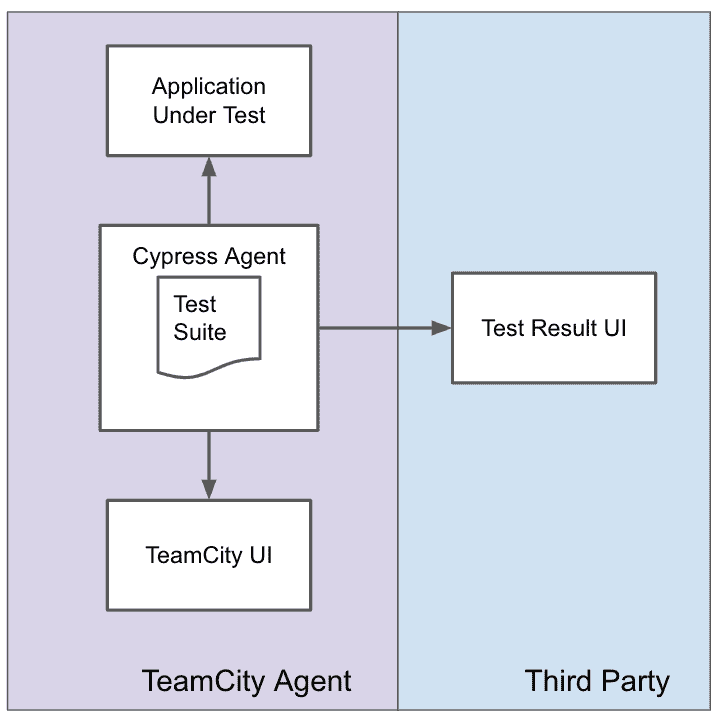

Cypress is structured fundamentally differently than Selenium. Whereas Selenium uses its drivers to remotely control actual browser instances, Cypress combines the browser and test runner into a single process. The net effect is that Cypress runs your tests on a close approximation of a browser rather than the real thing. This may or may not be a deal breaker for you; in my experience it has been close enough. Moreover, the test runner is likely to differ between development and CI. In development you interact with a Chrome-based test runner, but in CI it uses a headless Electron version. In practice, this means a test can pass on your local development machine and fail in CI.

The combination of the test runner and browser means that the simplest configuration in CI is running a single instance on your machine:

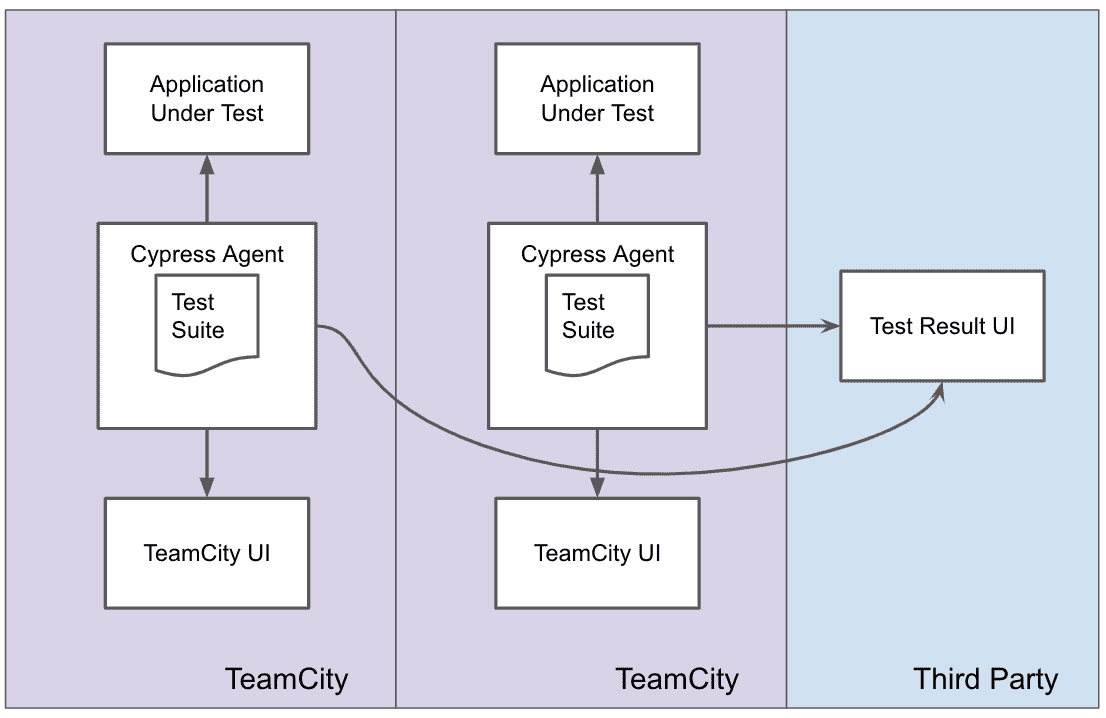

Based on the Selenium approach, you might assume that an easy way to parallelize is to add more test runners on a single machine:

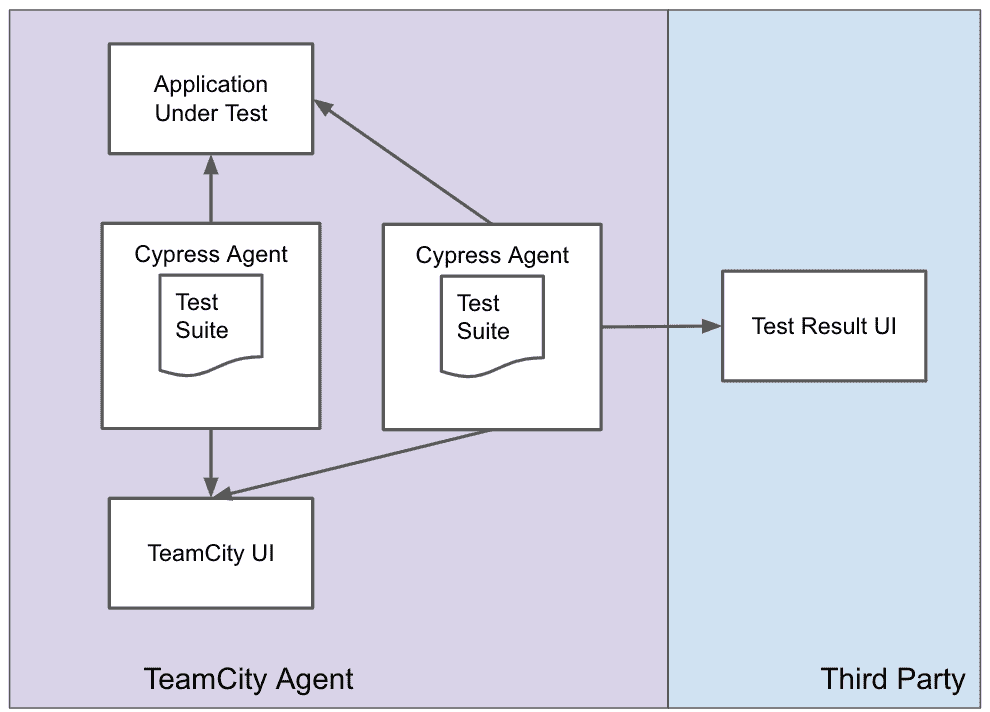

This is a bad idea; the test runner is incredibly resource intensive, and in my experience running multiple copies on a single CI instance actually increases the amount of time required to run the test suite. Instead, your only course of action is to run multiple test runners across multiple machines. One strategy is to just run multiple CI agents with the same copy of the code.

So long as you pass a common id to the Cypress runner on each agent (and pay cypress.io), Cypress will figure out the test scheduling so that the tests are run in parallel. Since your limiting factor in CI across the board is likely the number of agents you pay for, a less blocking solution is to go back to the future and offload the runners into a cluster.

While this technique can speed up your test runs and scale Cypress in CI to hundreds of tests, it is definitely more cost intensive than scaling the same number of Selenium tests. Overall, while I am very happy with Cypress in development, I am less satisfied with it in CI.
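The "common id" corresponds to the Cypress CLI's `--ci-build-id` flag, which is used together with `--record` and `--parallel`. Here is a small sketch of building the command that each agent (or cluster container) would run. The helper name, the group label, and the example id are hypothetical, and the record key is assumed to be provided through the `CYPRESS_RECORD_KEY` environment variable.

```typescript
// Build the arguments each CI agent passes to the Cypress CLI.
// Every agent taking part in the same run must use the same buildId,
// otherwise Cypress treats them as separate runs and will not split the specs.
function cypressParallelArgs(buildId: string, group = "ci"): string[] {
  if (buildId.trim() === "") {
    throw new Error("buildId must be a non-empty string");
  }
  return ["run", "--record", "--parallel", "--ci-build-id", buildId, "--group", group];
}

// Hypothetical id: anything unique per pipeline run and shared by all agents,
// for example a commit hash combined with the CI build number.
const parallelArgs = cypressParallelArgs("a1b2c3d-1042");
console.log(["npx", "cypress", ...parallelArgs].join(" "));
// prints "npx cypress run --record --parallel --ci-build-id a1b2c3d-1042 --group ci"
```

The command is identical on every machine; only the number of machines running it changes, which is what makes moving from CI agents to a cluster of containers straightforward.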

Conclusion

While browser interaction tests can be very valuable for catching defects before you release code to your users, scaling them is not for the faint of heart. If you are able to pay, there are shortcuts, but even then you are likely to run into headaches.
